The UK’s digital landscape underwent its most significant transformation yet on Friday, July 25, 2025. Key child-protection provisions of the Online Safety Act 2023, seven years in the making, are now being enforced by Ofcom (the UK’s communications regulator). These new rules fundamentally change how British citizens access and interact with online content, with the primary goal of protecting children from harmful material.

What Is the Online Safety Act?

The Online Safety Act is comprehensive legislation designed to make the UK “the safest place in the world to be online.” The law places legal responsibilities on social media companies, search engines, and other online platforms to protect users—especially children—from illegal and harmful content.

The Act applies to virtually any online service that allows user interaction or content sharing, including social media platforms, messaging apps, search engines, gaming platforms, dating apps, and even smaller forums or comment sections.

Origins of the Online Safety Act

The journey to the UK Online Safety Act was a long and complex one, beginning with the Government’s 2019 Online Harms White Paper. This initial proposal outlined the need for a new regulatory framework to tackle harmful content. The draft Online Safety Bill was published in May 2021, sparking years of intense debate and scrutiny in Parliament. Public pressure, significantly amplified by tragic events and tireless campaigning from organizations like the Molly Rose Foundation, played a crucial role in shaping the legislation and accelerating its passage. After numerous amendments and consultations with tech companies, civil society groups, and child safety experts, the bill finally received Royal Assent on October 26, 2023, officially becoming the Online Safety Act.

Who Must Comply with the Online Safety Act?

The Act applies to a vast range of online services accessible within the UK. The core focus is on platforms that host user-generated content (known as user-to-user services) and search engines. Ofcom, the regulator, has established a tiered system to apply the rules proportionally. Category 1 services are the largest and highest-risk platforms like Meta (Facebook, Instagram), X (formerly Twitter), and Google, which face the most stringent requirements. Category 2A covers search services, and Category 2B includes all other in-scope services that don’t meet the Category 1 threshold, such as smaller social media sites, online forums, and commercial pornographic websites. Notably, services like email, SMS, and content on recognized news publisher websites are exempt from these specific regulations.

The Changes That Started July 25, 2025

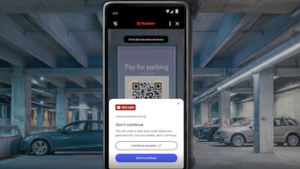

Mandatory Age Verification for Adult Content

The most immediate change for consumers is the replacement of simple “Are you 18?” checkboxes with robust age verification. As Oliver Griffiths from Ofcom explained: “The situation at the moment is often ridiculous because people just have to self-declare what their birthday is. That’s no check at all.”

There are three main ways that Brits will now be asked to prove their age:

Age Estimation Methods:

- Facial age estimation using approved third-party services like Yoti or Persona

- Email-based age verification that checks if your email is linked to household utility bills

Information Verification:

- Bank or mobile provider checks where these institutions confirm your adult status

- Automated checks that return only a “yes” or “no” to the website, without sharing personal details

Document Verification:

- Official ID verification requiring passport or driver’s license, similar to showing ID at a supermarket

Important Dates and Compliance Deadlines

- October 2023: The Online Safety Act receives Royal Assent and becomes law.

- November 2023 – May 2025: Ofcom undertakes three phases of consultation, developing the detailed rules and codes of practice needed to enforce the Act.

- July 25, 2025: The first key enforcement date for the Online Safety Act. Ofcom begins enforcing rules on illegal content, with a primary focus on requiring services that host pornography to implement robust age assurance measures.

- Late 2025: The deadline for all in-scope services to complete their first illegal content risk assessments.

- Early 2026: Expected deadline for larger platforms (Category 1) to comply with duties related to protecting children from legal but harmful content.

- Beyond 2026: Ongoing compliance cycles, with platforms required to submit regular transparency reports to Ofcom detailing the safety measures they have in place.

Stricter Content Controls for Children

Platforms must now actively prevent children from accessing content related to suicide, self-harm, eating disorders, pornography, violent or abusive material, online bullying, dangerous challenges or stunts, and hate speech.

Social media platforms and large search engines must keep harmful content off children’s feeds entirely, with algorithms that recommend content required to filter out dangerous material.
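To make the feed requirement concrete, here is a minimal sketch of how a recommender might drop restricted material for anyone who has not been verified as an adult. It is an illustration only: the `Post` type, the label names, and the `filter_feed` function are hypothetical, the labels are assumed to come from a platform's own upstream classifier, and nothing here reflects a method prescribed by Ofcom or used by any specific platform.

```python
from dataclasses import dataclass

# Hypothetical label names, loosely mirroring the content types listed above.
RESTRICTED_FOR_CHILDREN = frozenset({
    "suicide", "self_harm", "eating_disorder", "pornography",
    "violence", "bullying", "dangerous_challenge", "hate_speech",
})


@dataclass(frozen=True)
class Post:
    post_id: str
    labels: frozenset  # assumed to be assigned by an upstream classifier


def filter_feed(candidates, viewer_is_verified_adult):
    """Return the posts that may be recommended to this viewer.

    Viewers who are not verified adults are treated as children by default,
    so any post carrying a restricted label is removed from their feed.
    """
    if viewer_is_verified_adult:
        return list(candidates)
    return [p for p in candidates if not (p.labels & RESTRICTED_FOR_CHILDREN)]


posts = [
    Post("a", frozenset({"sports"})),
    Post("b", frozenset({"dangerous_challenge", "viral"})),
    Post("c", frozenset()),
]
child_feed = filter_feed(posts, viewer_is_verified_adult=False)
print([p.post_id for p in child_feed])
```

The design choice worth noting is the default: an unverified viewer gets the filtered feed, so a missing age check fails safe rather than open.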

Enhanced Platform Responsibilities

Online services must now provide clear and accessible reporting mechanisms for both children and parents, maintain procedures for quickly taking down dangerous content, and name a person “accountable for children’s safety,” with annual reviews of how they manage risks to children.

How to Comply with the Online Safety Act

- Conduct Detailed Risk Assessments: Platforms must proactively identify and evaluate the risks of illegal and harmful content appearing on their service, paying special attention to risks faced by children.

- Practice “Safety by Design”: This principle requires companies to build safety features directly into their services from the start, rather than treating safety as an afterthought. This includes systems to prevent harmful content from being recommended by algorithms.

- Implement Robust Age-Assurance: For services that host pornography or other age-restricted content, this means selecting and deploying effective age verification technologies to prevent children from gaining access. This is the change UK users of adult sites are most likely to notice.

- Publish Transparency Reports: Companies must regularly report to Ofcom and the public on the steps they are taking to manage risks and comply with the Online Safety Act.

- Appoint a UK Representative: Companies based outside the UK that are in scope of the Act must appoint a legal representative within the country to be accountable for compliance.

Ofcom’s enforcement will follow a proportionality principle, meaning the largest platforms with the highest reach and risk will face the most demanding obligations. Platforms are strongly advised to seek early legal and technical guidance to ensure they meet their specific duties under the new law.

The Scale of the Problem

The statistics that drove this legislation are shocking:

- Around 8% of children aged 8-14 in the UK visited an online porn site or app in a month

- 15% of 13-14-year-olds accessed online porn in a month

- Boys aged 13-14 are significantly more likely to visit porn services than girls (19% vs 11%)

- The average age children first see pornography is 13, with 10% seeing it by age 9

According to the Children’s Commissioner, half of 13-year-olds surveyed reported seeing “hardcore, misogynistic” pornographic material on social media sites, with material about suicide, self-harm, and eating disorders described as “prolific.”

Major Platforms Already Complying

Major websites like PornHub, X (formerly Twitter), Reddit, Discord, Bluesky, and Grindr have already committed to following the new rules. Over 6,000 websites hosting adult content have implemented age-assurance measures.

Reddit started checking ages last week for mature content using technology from Persona, which verifies age through uploaded selfies or government ID photos. X has implemented age estimation technology and ID checks, and defaults unverified users to settings that restrict sensitive content.

Privacy and Security: What You Need to Know

Many consumers worry about privacy implications of age verification, but the system has built-in protections:

- Adult websites don’t actually receive your personal information

- Age-checking services don’t learn what content you’re trying to view

- The process is compliant with data protection laws and simply gives websites a “yes” or “no”

- You remain anonymous with no link between your identity and online habits
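The “yes or no” idea in the list above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a description of how Yoti, Persona, or any other provider actually works: the function names are hypothetical, and the shared HMAC key is an assumption made to keep the example within Python's standard library (a real deployment would more likely use asymmetric signatures so the website cannot mint its own tokens). The point is only that the token carries an age result and an expiry, with no name, birthday, or document data.

```python
import hashlib
import hmac
import json
import secrets
import time


def issue_age_token(shared_key: bytes, is_over_18: bool, ttl_seconds: int = 300) -> str:
    """Age-check provider side: sign a claim holding only the yes/no result."""
    payload = json.dumps(
        {
            "over_18": is_over_18,
            "exp": int(time.time()) + ttl_seconds,  # short-lived token
            "nonce": secrets.token_hex(8),          # makes each token unique
        },
        sort_keys=True,
    )
    signature = hmac.new(shared_key, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{signature}"


def site_accepts(shared_key: bytes, token: str) -> bool:
    """Website side: check the signature, the expiry, and the yes/no flag."""
    payload, _, signature = token.rpartition(".")
    expected = hmac.new(shared_key, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return False  # tampered with, or signed under a different key
    claims = json.loads(payload)
    return claims.get("over_18") is True and claims.get("exp", 0) > time.time()


key = secrets.token_bytes(32)  # hypothetical key shared by provider and site
token = issue_age_token(key, is_over_18=True)
print(site_accepts(key, token))
```

Because the provider is never told which page the user wants, and the site only ever sees the signed flag, neither party can link an identity to browsing habits in this simplified model.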

Best Practices for Privacy:

- Choose facial age estimation when available (supported by over 80% of users)

- Avoid photo ID verification when possible to minimize data sharing

- Understand that verification status may be stored to avoid repeated checks

Enforcement: Real Consequences for Non-Compliance

Companies face serious penalties for non-compliance: fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is greater. For a company like Meta, this could mean a £16 billion fine.
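The “whichever is higher” rule is simple arithmetic, sketched below. The function name is hypothetical, and the £160 billion revenue figure is not a reported number: it is back-solved from the article's £16 billion example purely to show the calculation. Amounts are whole pounds so the integer math stays exact.

```python
STATUTORY_AMOUNT_GBP = 18_000_000  # the fixed figure in the penalty cap
REVENUE_SHARE_PERCENT = 10         # the revenue-based alternative


def max_penalty_gbp(worldwide_revenue_gbp: int) -> int:
    """Return the maximum fine: the greater of £18m or 10% of revenue."""
    revenue_based = worldwide_revenue_gbp * REVENUE_SHARE_PERCENT // 100
    return max(STATUTORY_AMOUNT_GBP, revenue_based)


# A small service with £50m revenue: 10% is £5m, so the £18m figure applies.
print(max_penalty_gbp(50_000_000))

# Hypothetical revenue of £160bn (assumed, see above): the 10% figure applies.
print(max_penalty_gbp(160_000_000_000))
```

The crossover sits at £180 million in revenue: below that, the £18 million figure sets the ceiling; above it, the 10% share does.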

In extreme cases, senior managers at tech companies face criminal liability and up to two years in jail for repeated breaches. Ofcom can also apply for court orders to block services from being available in the UK.

Ofcom has already launched probes into 11 companies suspected of breaching parts of the Online Safety Act and expects to announce new investigations into platforms that fail to comply with age check requirements.

The VPN Reality Check

Some users will turn to VPNs to bypass age verification. Ofcom acknowledges this limitation but emphasizes that most exposure doesn’t come from children actively seeking harmful content: “Our research shows that these are not people that are out to find porn. It’s being served up to them in their feeds.”

As Griffiths explained: “There will be dedicated teenagers who want to find their way to porn, in the same way as people find ways to buy alcohol under 18. They will use VPNs. And actually, I think there’s a really important reflection here… Parents having a view in terms of whether their kids have got a VPN, and using parental controls and having conversations, feels a really important part of the solution.”

What This Means for Different Users

For Parents

You now have stronger tools and clearer accountability from platforms. Two-thirds of parents already use controls to limit what their children see online, and the new rules provide additional safeguards, though about one in five children can still disable parental controls.

For Adult Users

You may experience “some friction” when accessing adult material, but the changes vary by platform. On many services, users will see no obvious difference at all, as only platforms which permit harmful content and lack safeguards are required to introduce checks.

For Teens

Stricter age controls mean more restricted access to certain content, but platforms must also provide better safety tools and clearer reporting mechanisms.

The Bigger Picture: Managing Expectations

Industry experts and regulators emphasize that this is “the start of a journey” rather than an overnight fix. As one tech lawyer noted: “I don’t think we’re going to wake up on Friday and children are magically protected… What I’m hoping is that this is the start of a journey towards keeping children safe.”

Ofcom’s approach will be iterative, with ongoing adjustments and improvements. The regulator has indicated it will take swift action against platforms that deliberately flout rules but will work constructively with those genuinely seeking compliance.

Impact of the Online Safety Act on Users and Industry

The UK Online Safety Act is set to have a profound impact, bringing both significant benefits and notable challenges. For users, the primary benefit is a safer online environment, especially for children who will be better shielded from harmful content. Increased transparency from platforms will also empower users with more information about the risks on services they use. However, some users have raised concerns about data privacy related to age verification and the potential for the Act to stifle free expression and lead to over-removal of legitimate content.

For the tech industry, the law presents major operational hurdles. Compliance will require substantial investment in technology, content moderation, and legal expertise, with costs potentially running into the billions across the sector. Smaller platforms may struggle to meet the requirements, potentially hindering innovation and competition. The key takeaway is that the Online Safety Act marks a paradigm shift, moving from self-regulation to a legally enforceable duty of care, the full effects of which will unfold over the coming years as Ofcom’s enforcement ramps up.

Criticism and Future Developments

Some campaigners argue the measures don’t go far enough, with the Molly Rose Foundation calling for additional changes and some MPs wanting under-16s banned from social media completely. Privacy advocates worry about invasive verification methods, while others question effectiveness.

Parliament’s Science, Innovation and Technology Committee has criticized the act for containing “major holes,” particularly around misinformation and AI-generated content. Technology Secretary Peter Kyle has promised to “shortly” announce additional measures to reduce children’s screen time.

Looking Ahead

This week’s implementation represents “the most significant milestone yet” in the UK’s bid to become the safest place online. While the changes may not be immediately visible to all users, they establish crucial foundations for ongoing child safety improvements.

The Online Safety Act is designed to be a living framework that evolves with technology and emerging threats. Expect continued refinements, additional measures, and stronger enforcement as the system matures.

The Online Safety Act represents a fundamental shift in how online platforms operate in the UK. While it may introduce some inconvenience through age verification processes, the legislation prioritizes protecting children from genuine harm.

The success of these measures will depend on consistent enforcement, platform cooperation, and ongoing parental engagement. As one Ofcom official noted: “I think people accept that we’re not able to snap our fingers and do everything immediately when we are facing really deep-seated problems that have built up over 20 years. But what we are going to be seeing is really big progress.”

Stay informed about these changes, understand your verification options, and remember that these new safeguards are designed to protect the most vulnerable internet users while preserving legitimate access for adults.